How can we assess the condition of the WEST tokamak walls faster and more reliably after each plasma run? This is the challenge taken up by LLM4PPO, a generative multimodal AI model that automatically analyzes infrared images of WEST's plasma-facing components and assists experts in protecting the first wall. This proof of concept paves the way for new decision-support tools for future experiments in ITER.

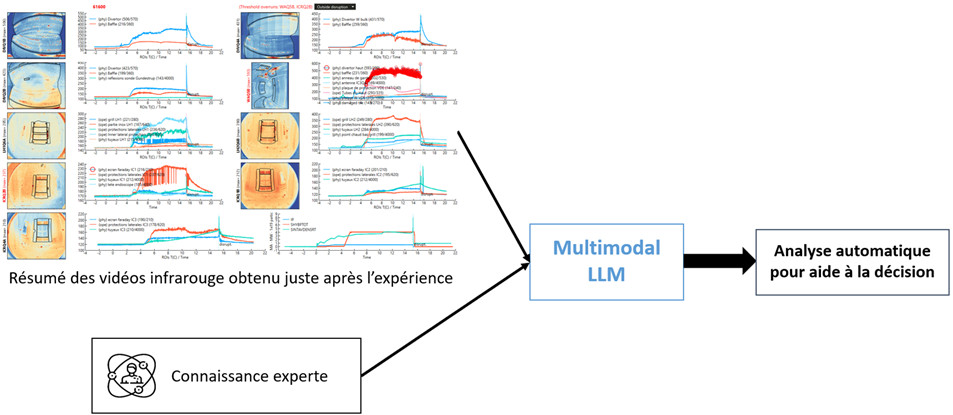

In experimental fusion facilities, the walls that interact with the plasma are monitored mainly with infrared cameras. As part of a thesis carried out at CEA-IRFM, an innovative tool based on a multimodal language model, called LLM4PPO [1], was developed to automatically analyze infrared videos of the WEST tokamak wall [2]. In the control room, after each plasma run, the team responsible for protecting the first wall has access to a dashboard combining infrared images and temperature curves. Interpreting these data requires specialized expertise that is hard to formalize but essential for quickly assessing the condition of the walls and ensuring that the monitored components stay within their operating range.
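The operating-range check described above can be illustrated with a simple rule-based baseline. This is not how LLM4PPO works (the model interprets the infrared images and curves itself); it is a minimal sketch, assuming each monitored region is summarized by its peak temperature and a per-component limit. The names `RegionOfInterest` and `prioritize`, the 80% warning threshold, and the limits are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RegionOfInterest:
    name: str          # hypothetical label for a monitored component
    max_temp_c: float  # peak IR surface temperature over the run, in degrees C
    limit_c: float     # assumed operating limit for that component, in degrees C


def prioritize(regions: List[RegionOfInterest],
               warn_fraction: float = 0.8) -> List[RegionOfInterest]:
    """Keep regions at or above warn_fraction of their limit, most critical first."""
    # Hypothetical warning rule: flag anything within 20% of its own limit.
    flagged = [r for r in regions if r.max_temp_c >= warn_fraction * r.limit_c]
    # Rank by how close each region is to its own limit (ratio, descending).
    return sorted(flagged, key=lambda r: r.max_temp_c / r.limit_c, reverse=True)
```

Normalizing by each component's own limit, rather than ranking raw temperatures, matters because different plasma-facing components tolerate different temperatures; the expertise the article describes goes well beyond such a fixed threshold.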

Large language models (LLMs) are mainly used for natural language processing tasks such as text translation and summarization. Multimodal LLMs extend these capabilities to other types of input, such as images, audio, or video. Building on recent advances in LLMs and on targeted adaptation techniques such as LoRA (Low-Rank Adaptation), LLM4PPO can draw on knowledge approaching that of human experts. It can automatically identify and prioritize critical areas and generate an analysis report, providing near-immediate decision support at the end of the plasma run that has just been carried out (Figure 1).
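To make the LoRA idea concrete: instead of retraining a pretrained weight matrix $W_0$, LoRA freezes it and learns a low-rank correction, so the adapted layer computes $W_0 x + \frac{\alpha}{r} B A x$ with $B$ of size $d_{out} \times r$ and $A$ of size $r \times d_{in}$. The sketch below is a generic, dependency-free illustration of that standard formulation; the source does not say which layers, rank $r$, or scaling $\alpha$ LLM4PPO uses, and the 4096-wide layer in the example is hypothetical.

```python
import random


def matvec(m, v):
    """Multiply a matrix m (list of rows) by a vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Linear layer y = W0 x + (alpha / r) * B (A x), with W0 frozen.

    Only A (r x d_in) and B (d_out x r) would be updated during fine-tuning.
    """

    def __init__(self, w0, rank, alpha, seed=0):
        d_out, d_in = len(w0), len(w0[0])
        rng = random.Random(seed)
        self.w0 = w0                 # pretrained weights, never modified
        self.scale = alpha / rank    # standard LoRA scaling factor alpha / r
        # A gets a small random init; B starts at zero, so the adapted layer
        # initially behaves exactly like the pretrained one.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.w0, x)                  # W0 x
        delta = matvec(self.b, matvec(self.a, x))  # B (A x), the rank-r update
        return [y + self.scale * d for y, d in zip(base, delta)]


def lora_param_count(d_out, d_in, rank):
    """Trainable parameters added by LoRA for one d_out x d_in weight matrix."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    # Hypothetical 4096 x 4096 layer adapted with rank 8.
    full = 4096 * 4096
    adapter = lora_param_count(4096, 4096, rank=8)
    print(f"{adapter} trainable vs {full} frozen ({adapter / full:.2%})")
```

For that hypothetical layer, the adapter trains 65,536 parameters instead of about 16.8 million, roughly 0.39%. This is why LoRA suits specializing a large model on a dataset as small as a single experimental campaign.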

During initial tests under real-world conditions, LLM4PPO achieved a satisfaction rate of 43% among the experts responsible for protecting the plasma-facing components (PPOs), with a further 30% of responses rated neutral. These results are all the more promising given that, at this stage, the model has been trained on a single experimental campaign, a relatively small dataset. This is primarily a proof of concept: it demonstrates the relevance of the approach for PPO decision support while highlighting the need to train on a larger corpus to improve performance.

To improve over time, LLM4PPO relies on a direct feedback mechanism: PPO experts evaluate the generated results through a web interface, and their feedback is added to the training database. Each new fine-tuning session thus closes the learning loop, allowing the system to adapt continuously to real control-room conditions and to make fewer errors over time.
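The feedback loop can be pictured as a small data pipeline: each rated report is stored, rating shares are tallied (the kind of count behind the satisfaction figures reported above), and the next fine-tuning set is assembled from approved or corrected reports. The sketch below rests on assumptions not stated in the source: a three-level rating scale, an optional expert correction field, and the hypothetical names `Feedback`, `rating_shares`, and `next_training_examples`. A real training example would pair the infrared images with the target report; the pulse identifier stands in for them here.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple

RATINGS = ("satisfied", "neutral", "unsatisfied")  # assumed three-level scale


@dataclass
class Feedback:
    pulse_id: int                           # hypothetical WEST pulse identifier
    generated_report: str                   # analysis report produced by the model
    rating: str                             # one of RATINGS
    corrected_report: Optional[str] = None  # expert's corrected version, if any


def rating_shares(feedback: List[Feedback]) -> dict:
    """Fraction of reports in each rating category."""
    if not feedback:
        return {label: 0.0 for label in RATINGS}
    counts = Counter(f.rating for f in feedback)
    return {label: counts[label] / len(feedback) for label in RATINGS}


def next_training_examples(feedback: List[Feedback]) -> List[Tuple[int, str]]:
    """Select (pulse_id, target_report) pairs for the next fine-tuning session."""
    examples = []
    for f in feedback:
        if f.corrected_report:
            # An expert correction is the preferred training target.
            examples.append((f.pulse_id, f.corrected_report))
        elif f.rating == "satisfied":
            # Approved outputs are reused as-is.
            examples.append((f.pulse_id, f.generated_report))
        # Neutral or unsatisfied outputs without a correction are skipped.
    return examples
```

Preferring expert corrections over the model's own approved outputs is one possible design choice. It pushes each session toward the experts' wording, at the cost of requiring PPOs to write corrections rather than simply rate.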

Despite its current limitations, the model has shown that it can highlight critical areas and improve the responsiveness of PPO teams. This first application of multimodal LLMs to fusion paves the way for new assistance tools for wall protection. Such tools could significantly accelerate ITER's fusion ramp-up while ensuring the integrity of the components exposed to the plasma.

[1] – LLM4PPO: Large Language Models (LLMs) for the Plasma-Facing Component Protection Officer (PPO)

[2] – V. Gorse, R. Mitteau, J. Marot, "Decision Support for In-Operation Monitoring of the West Tokamak First Wall Using Multimodal Large Language Model on Infrared Imaging". http://dx.doi.org/10.2139/ssrn.5177592